The sense of smell is often unfairly overlooked, but it is becoming increasingly clear that it plays an essential role in many important functions. The average human nose has recently been estimated to discriminate between more than 1 trillion different smells, each of which is a mixture of odorant molecules recognised by the nose. Of the ~24,000 genes in every person’s genetic make-up, around 400 code for smell receptors that reside in the nose. These receptors bind odorant molecules and trigger neural signals that travel to the brain.

Our sense of smell – or ‘olfaction’ – has long been known to be closely associated with our emotions. Strongly emotional memories often involve elements of smell (e.g. the smell of sun cream reminding you of holidays, or the smell of cut grass reminding you of sports day), so perhaps it isn’t surprising that many of our relationships with other people can be helped (or hindered!) by our sense of smell too.

Sniffing out friends

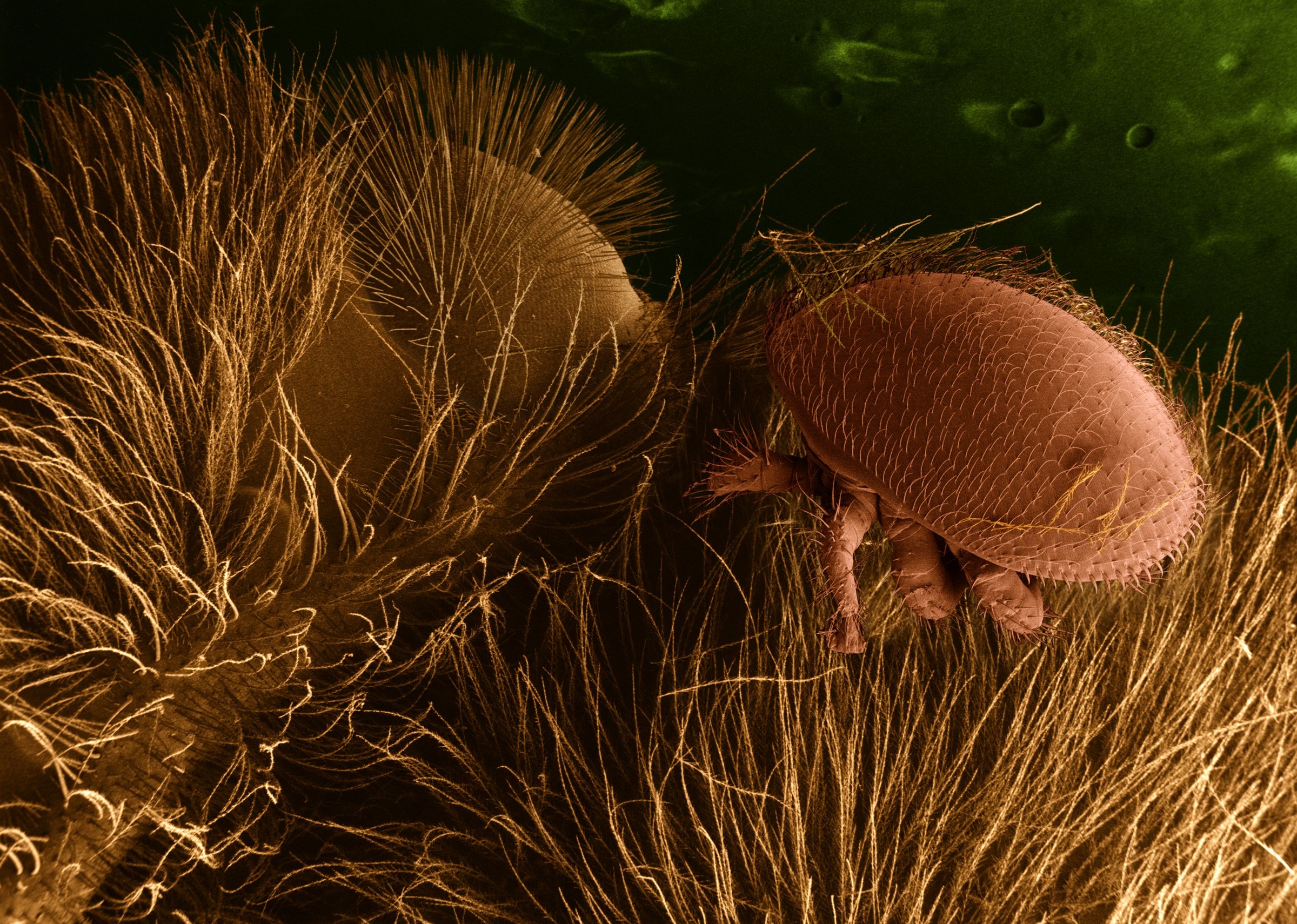

Pheromones in men’s and women’s sweat can give clues about their gender to the people who are attracted to them: heterosexual members of the opposite sex, or homosexual people of the same sex. In one experiment, smelling the male steroid androstadienone made it easier for heterosexual women and homosexual men to identify a ‘gender-neutral’ point-light walker (a walking figure shown only as moving dots of light at its joints) as ‘male’. By contrast, the smell of the female steroid estratetraenol made it easier for heterosexual men to identify the walker as ‘female’. The responses of bisexual people and homosexual women to estratetraenol fell, on average, between those of heterosexual men and heterosexual women. So our sense of smell may help alert us to members of the sex we are attracted to.

In fact, a person’s smell may even determine how attractive they are to potential mates. Major Histocompatibility Complex (MHC) molecules are part of the immune system: they sit on the surface of our cells and display fragments of the proteins found inside them. This lets immune cells check whether a cell belongs to you, or whether it has been infected or become diseased. If the displayed fragments look foreign, the immune system launches a response to get rid of the pathogen (such as a virus, bacterium or parasite) or the diseased cell. What’s amazing is that the genes that code for MHC molecules are the most variable genes in the entire genome. That is, they come in a huge number of different versions (alleles), so any two unrelated people are likely to carry different combinations of them.

Fascinatingly, the level of MHC similarity between two people may influence how attractive they find one another, and this effect may be mediated by our sense of smell. A study from 1995 found that women rated the smell of T-shirts worn for two days by men whose MHC genes were more dissimilar to their own as more attractive than the smell of T-shirts worn by men who shared more MHC alleles with them. (Curiously, women taking the contraceptive pill showed roughly the opposite preference, suggesting that the pill might affect either the sense of smell or the choice of mate.) This study prompted a whole range of hypotheses about how sniffing out potential mates’ MHC type could help prevent inbreeding between genetically similar people, or strengthen their future babies’ defences against infection.

What’s more, babies use smell to identify their mothers so that they can start suckling. It was previously thought that babies begin suckling in response to their mothers’ pheromones, which are detected by a different sensory organ from the one used for ordinary ‘smelling’. Instead, experiments in mice suggest that babies learn the unique smell of their mother. This is interesting because it means that babies do not instinctively know their mother’s scent through the pheromone system, which is innately programmed; rather, at least in mice, they must learn to recognise ‘mum’ in order to start suckling and therefore survive.

Sniffing out foes and illness

A recent report from a neuroscience lab at McGill University suggests that laboratory mice used in pain research respond differently depending on the sex of the researcher. Specifically, male mice showed more signs of stress when handled by male researchers, and this stress response dampened the pain responses that the researchers were trying to measure. As a result, the mice appeared to feel less pain whenever male researchers were around. In fact, the same effect appeared not only when male researchers were present, but also when the mice were given bedding that carried the scent of male researchers, or of other unfamiliar male mammals. So smells can affect both stress and pain, and the smells that trigger these responses need not come from animals of the same species.

Your sense of smell may also help you to avoid catching illnesses. When people are injected with lipopolysaccharide (LPS), a molecule from the outer membrane of certain bacteria, they develop a fever as if they had an infection, and the levels of immune signalling molecules called cytokines rise in their blood. In one experiment, people were asked to rate the smell of T-shirts worn by volunteers who had been injected either with LPS or with saline (which would not have the same effect on the immune system but would still involve an injection, something that can make some people sweat!). Participants tended to rate the LPS group’s T-shirts as less pleasant, more intense and ‘less healthy’ than the control group’s T-shirts. Remarkably, these T-shirts were worn for only 4 hours, and the ‘unhealthy’ ratings correlated with the level of cytokines in the wearers’ blood.

So our sense of smell can guide us towards people with whom we might have more biological ‘chemistry’, and guard us against sick people (and male scientists!). But why is it useful to know about our sense of smell? Well, besides anosmia, a condition in which people can’t smell anything at all, there is some evidence that people with other, more common disorders have an impaired sense of smell. For instance, psychopaths (who show less empathy, and tend to be more manipulative and callous than other individuals) have a reduced ability to identify or discriminate smells. People with depression are also less able to detect faint odours than non-depressed people, and people at higher risk of developing schizophrenia tend to misidentify smells. Understanding how our sense of smell relates to the rest of brain function might help scientists to develop ways of diagnosing, treating or monitoring improvement in disorders where this crucial sense goes wrong.

Post by Natasha Bray

Slipping a disc is, by all accounts, excruciating, but it usually starts to heal within 6-8 weeks. However, someone can be diagnosed with chronic back pain (CBP) if the pain hasn’t subsided after three months. Trouble is, this happens all too often, with an estimated 4 million people in the UK suffering from CBP at some point in their lives. The cost of CBP to the NHS is about £1 billion per annum, and that figure doesn’t even include lost working hours or lost livelihoods. Treatment usually focuses on relieving pain and reducing inflammation and, more recently, on cognitive behavioural therapy to address the patient’s psychology, especially if the original physical cause of the pain is no longer obvious.

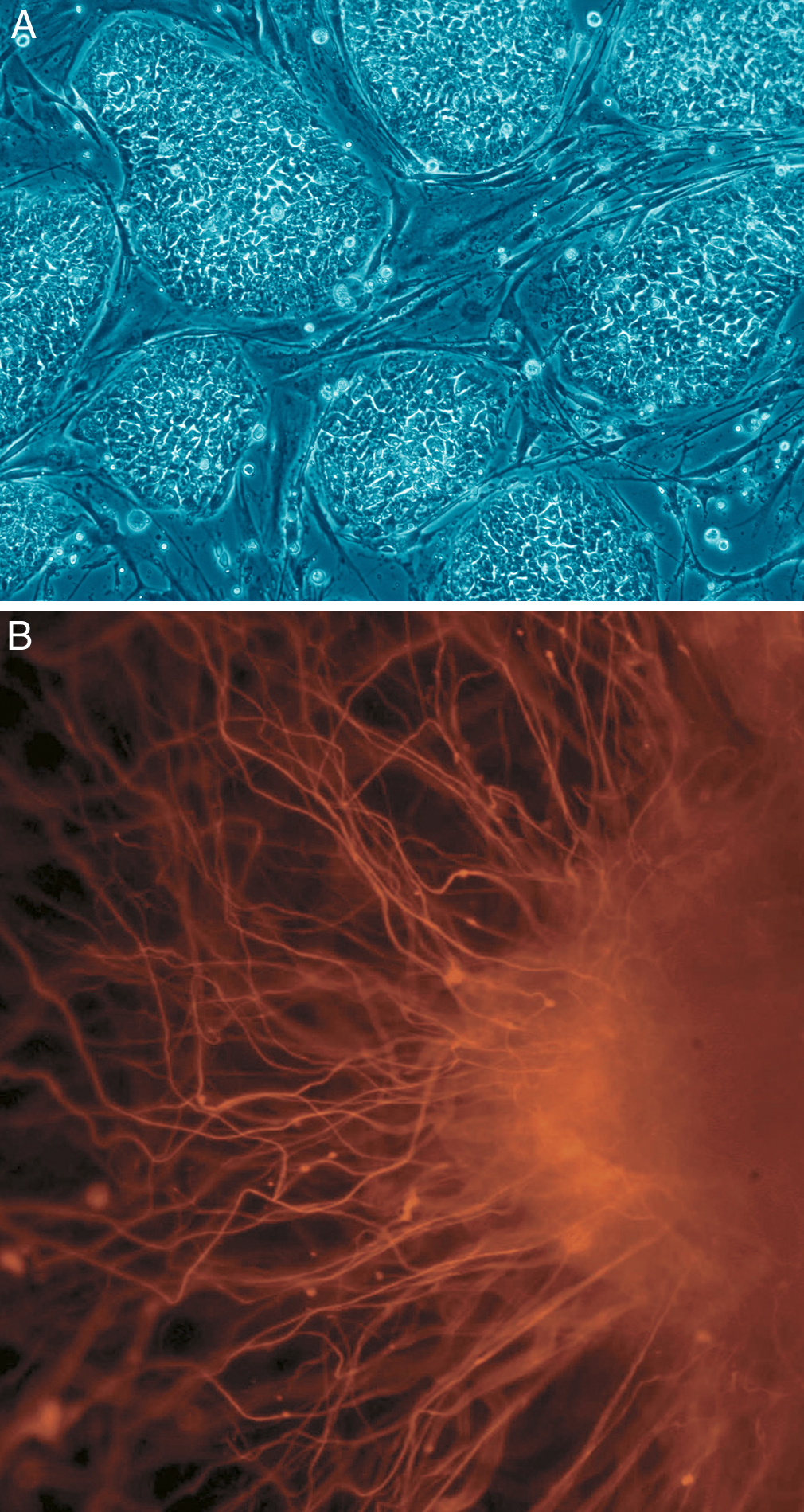

There are, however, candidate techniques that could be improved or perhaps combined. Imaging techniques, including optical,

I see the BRAIN initiative as a very worthy cause, a good example of aspirational ‘big science’ and a great endorsement for the future of neuroscience. One gripe I have with it, however, is that it seems a little like Obama’s catch-up effort in response to Europe’s Human Brain Project (HBP). The HBP involves 80 institutions striving to create a computer infrastructure powerful enough to mimic the human brain, right down to the molecular level. This raises the question: surely, to build an artificial brain, you first need to understand how the real one is put together? I really hope that the BRAIN initiative and the Human Brain Project put their ‘heads together’ to help each other untangle the complex workings of the brain.
