The end of a year is always a good time to reflect on your life; to see what you’ve achieved and plan for the year ahead. For example: this year I attempted to cycle 26 miles in a single day across the Peak District, despite having not used a bike since the 90s. Next year I intend to buy padded shorts before I even go near a bicycle. Oh and I also intend to get a PhD, but that’s no biggie (gulp).

But what sort of year has it been for the relationship between science and the public? I’d say it’s been a good one.

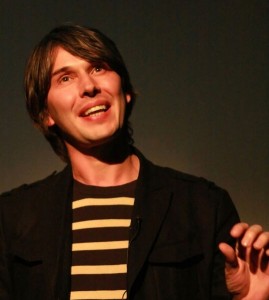

It may just be that I’m more aware of the science-communication world, having finally given in and joined Twitter, but I can sense that science is losing its reputation as a refuge for the über-nerdy and slowly working its way into the mainstream. Scientific stories and issues are being reported more frequently in the media and there appears to have been a glut of science-related shows on TV. Whether it’s Professor Brian Cox pointing at the sky, the US-based shenanigans of the nerds in The Big Bang Theory or priests taking ecstasy in the name of science live on Channel 4, science certainly seems to be taking centre stage.

This year appears to have brought about a shift in attitude towards science and scientists. This change has no doubt been helped by personalities such as Professor Brian Cox, Professor Alice Roberts and astrophysicist-turned-comedian Dara O’Briain. They have helped make science a bit cooler, a bit more interesting and above all a bit more accessible. Perhaps because of this, the viewing public now seem more willing to adopt a questioning and enquiring attitude towards the information they are given. We now often hear people asking things like: how did they find that out, where is the evidence for this claim, how many people did you survey to get that result and has that finding been replicated elsewhere?

The effect of this popularity, at least amongst the younger generation, can be seen in university applications. Despite an overall drop across the board in applications, the drop in students applying to study science has been much smaller than for other subjects. Applications for biological sciences dropped a mere 4.4%, compared to subjects such as non-European languages, which fell 21.5%. Biological science was also the fourth most popular choice, with 201,000 applicants, compared to 103,000 for Law (which dropped 3.8%). Physical science, which is in vogue at the moment mostly due to the aforementioned Prof. Cox and the Big Bang Boys, fell by a measly 0.6%. (Source).

However, it’s not just the public who have shown a shift in their attitudes. Scientists are getting much better at communicating their views and discoveries. There is now a huge range of science blogs managed and maintained by scientists at all stages of their careers (for some outstanding examples see here). Twitter is also stuffed full of people who have “science communicator” in their bio, ranging from professional communicators such as Ed Yong to PhD students and science undergraduates. This increased willingness of scientists to communicate their work has probably contributed in a big way to the shift in public feeling towards scientists. They are proving that, contrary to the stereotype, scientists are perfectly able to communicate and engage with their fellow humans.

Universities are also showing a shift in attitude towards better communication between scientists and the rest of the world. The University of Manchester has now introduced a science communication module to its undergraduate Life Sciences degree courses and several universities offer specialised master’s degrees in science communication. People who are already involved in science communication, such as the Head of BBC Science Andrew Cohen, are also touring universities giving lectures on how to get a science-related job in the media. This means that current undergraduates and PhD students are learning to communicate their discoveries alongside actually making them.

So what does this mean for the coming year? Our declining interest in singing contests and structured reality shows appears to be leaving a void in our celebrity culture. Will our new-found enthusiasm for ‘nerds’ mean that scientists could fill this gap? Will Brian Cox replace Kate Middleton as the most Googled celebrity in 2013? Will we see ‘The Only Way is Quantum Physics’ grace our screens in the new year?

The answer to these questions is still likely to be no. Whilst science does seem to be in vogue at the moment, scientists themselves don’t often seek out the limelight, perhaps due to their already large workload or the fact that being in the public eye does not fit with their nature. Figures such as Brian Cox and Dara O’Briain are exceptions to the “scientists are generally shy” rule, both being famous prior to their scientific debuts (as a keyboardist in an 80s group and a stand-up comedian, respectively).

Another reason that scientists aren’t likely to be the stars of tomorrow is that science communication is notoriously hard. Scientists have to condense amazingly complex concepts into something someone with no scientific background can easily understand. Many scientists are simply unwilling to reduce their work to this level, arguing that these explanations are too ‘dumbed down’ and therefore miss the subtlety necessary to really understand the work. Unfortunately, if communicators are unable to simplify their explanations it often leaves the rest of the population (myself included if it’s physics) scratching their heads. As a cell biologist, I find the hardest aspect of communicating my work is knowing what level I’m pitching at – would the audience understand what DNA is? A cell? A mitochondrion? You want to be informative without being either confusing or patronising, which is incredibly hard, and not a lot of people can do it well. This doesn’t mean that scientists won’t try to get their voices heard though. It may just be that they achieve this through less “in your face” media such as Facebook or Twitter, rather than via newspapers, magazines or TV.

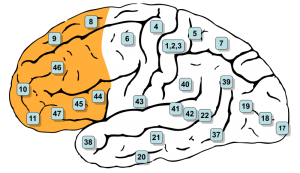

My hope is that the current increased interest in science may help shape the type of celebrity culture we see gracing our screens. It may also help to get across the concept that maybe it’s OK to be clever and be interested in the world around you. Maybe it’ll even become socially acceptable to be more interested in the Higgs Boson or the inner workings of the cell than seeing someone fall out of Chinawhite with no pants on.

Post by: Louise Walker