It’s a sensible question. You may think that our love of the celestial domain keeps astrophotographers up all night, or maybe that there are so many astronomical targets out there that it takes all night to photograph them. Or perhaps our telescopes are so complicated and delicate that it takes us hours to set them up. Well, these points may partly explain why I frequently keep my wife awake as I struggle to move my telescope back into the house…often past 4:00am! But there is a deeper reason, one that means almost every astronomical photo you see probably took hours or days of photography to produce. In this post I shall explain, with examples, why I and other poor souls go through this hassle.

Let me begin by saying that during a single night (comprising maybe 4-6 hours of photography time), I certainly do not spend my time sweeping across the night sky snapping hundreds of objects. Instead, I usually concentrate on photographing 1 or 2 astronomical targets, taking more than 40 identical shots of each. In this regard, astrophotography is quite different from other forms of photography. But why do this? What is the benefit of taking so many identical shots? Well, unlike most subjects in front of a camera, astronomical targets are dim…very dim. Many are so dim they are invisible in the camera’s viewfinder. To collect the light from these objects (galaxies, nebulae, star clusters, etc.) you must expose the camera sensor for several minutes per photo, instead of the fractions of a second you would use for daytime photography. Unfortunately, when you do this, the resulting image does not look very spectacular – it’s badly contaminated with noise.

These are 3-minute exposures of the Crescent and Dumbbell nebulae in the constellations Cygnus and Vulpecula respectively. You can see the nebulae, but there is also plenty of noise obscuring faint detail. This noise comes from several sources. The most prevalent is the random way photons strike the camera’s sensor (shot noise): rather like catching raindrops in a cupped hand, you cannot be sure exactly how many photons or raindrops will be caught at any one time. A second source is that a camera does not read values from its sensor perfectly (read noise), so some pixels will be brighter or dimmer as a result. Finally, a sensor’s pixels can only record light as one of a limited set of values. If the actual light intensity for a given pixel falls between two of these values, it is recorded as one of them, introducing a small error (quantisation error). There are further types of noise in astronomical images, such as skyglow, light pollution and thermal noise, but these can be dealt with by calibrating the images – a rather complex process I will discuss in a future post!
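To make the three noise sources concrete, here is a minimal Python sketch of a single pixel repeatedly exposed to a faint, unchanging star. It assumes every photon frees exactly one electron, that read noise is Gaussian, and that the camera simply rounds to whole numbers and clips to its range; the function names and parameter values (`mean_photons`, `read_noise_e`, `gain_e_per_adu`, `bits`) are hypothetical rather than taken from any real camera. Skyglow and thermal noise are deliberately left out, since those are handled by calibration.

```python
import math
import random


def poisson(rng: random.Random, lam: float) -> int:
    """Poisson-distributed count via Knuth's multiplication method (fine for small lam)."""
    threshold = math.exp(-lam)
    k, p = 0, rng.random()
    while p > threshold:
        k += 1
        p *= rng.random()
    return k


def expose_pixel(rng: random.Random, mean_photons: float, read_noise_e: float,
                 gain_e_per_adu: float, bits: int) -> int:
    """One hypothetical exposure of one pixel, returning the recorded value."""
    # 1. Shot noise: photons arrive at random, so the count varies frame to frame.
    #    (Assumes every photon frees exactly one electron.)
    electrons = poisson(rng, mean_photons)
    # 2. Read noise: the readout electronics add a small random error.
    electrons += rng.gauss(0.0, read_noise_e)
    # 3. Quantisation: only whole numbers in a limited range can be recorded.
    value = round(electrons / gain_e_per_adu)
    return max(0, min(2 ** bits - 1, value))


rng = random.Random(42)
# Ten identical exposures of the same faint star on a hypothetical 12-bit camera.
readings = [expose_pixel(rng, mean_photons=50, read_noise_e=3.0,
                         gain_e_per_adu=1.0, bits=12) for _ in range(10)]
print(readings)  # the values scatter around 50 even though the star never changed
```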

The best way of dealing with this noise is to take many repeated exposures and combine (stack) them. This method takes advantage of the fact that each photo will differ because of the random noise it contains but, critically, every photo will also contain the same signal (the detail of the target you photographed). As you combine them, the signal (which is consistent across the pictures) builds in strength, while the noise tends to cancel itself out. The result is an image with more signal and relatively less noise, revealing more detail than you could ever see in a single photograph. To the left is a good example of the improvement in quality you might expect to see as you stack more photos or frames.
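Here is a minimal sketch of average-stacking, assuming each frame is the same flat patch of signal plus independent Gaussian noise, and that the frames are already aligned (real software has to register them on the stars first). The helper names are hypothetical. Averaging N frames leaves the signal unchanged while the random scatter shrinks by roughly a factor of √N; summing the frames instead grows the signal by N and the noise by √N, which gives the same √N gain in signal-to-noise ratio.

```python
import random
import statistics


def noisy_frame(rng: random.Random, signal: list[float], sigma: float) -> list[float]:
    """Hypothetical frame: the true signal plus independent Gaussian noise in every pixel."""
    return [s + rng.gauss(0.0, sigma) for s in signal]


def stack(frames: list[list[float]]) -> list[float]:
    """Average-combine already-aligned frames, pixel by pixel."""
    return [sum(values) / len(values) for values in zip(*frames)]


def stacked_noise(n_frames: int, rng: random.Random, level: float = 10.0,
                  n_pixels: int = 2000, sigma: float = 5.0) -> float:
    """Stack n_frames of a flat patch of sky and measure the scatter that remains."""
    signal = [level] * n_pixels
    frames = [noisy_frame(rng, signal, sigma) for _ in range(n_frames)]
    return statistics.pstdev(stack(frames))


rng = random.Random(1)
for n in (1, 4, 16, 64):
    print(f"{n:2d} frames: noise ~ {stacked_noise(n, rng):.2f} "
          f"(sqrt(N) predicts {5.0 / n ** 0.5:.2f})")
```

Under these assumptions, going from 1 to 64 frames should cut the noise to roughly one eighth of its single-frame value.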

In addition, the bit depth of the image, which is the precision with which an image can define a colour, also increases as you stack. For example, if you have a single 3-bit pixel (it can show 2³=8 values, i.e. from 0 to 7), a single image may measure the brightness of a star as 5 when the true value is actually 5.42. In this scenario, taking 10 photos, each giving the star a slightly different brightness value, may give you 5, 5, 6, 5, 7, 6, 4, 5, 5 and 6. The average of these is 5.4, a more accurate value than the original single-shot reading. The end result is a photo with lots of subtle detail that fades smoothly into the blackness of space.
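The arithmetic in this example is easy to check, and a short simulation shows why it works. This sketch assumes each 3-bit reading is the true value plus random Gaussian noise, rounded and clipped to the 0 to 7 range; `read_3bit`, `averaged_reading` and the noise level of 0.8 are illustrative assumptions. The noise is what does the work: with no noise every reading would be 5, and no amount of averaging would get you any closer to 5.42.

```python
import random

# The ten 3-bit readings quoted above for a star whose true brightness is 5.42.
readings = [5, 5, 6, 5, 7, 6, 4, 5, 5, 6]
print(sum(readings) / len(readings))  # 5.4


def read_3bit(rng: random.Random, true_value: float, noise_sigma: float) -> int:
    """One hypothetical 3-bit reading: add random noise, round, clip to 0..7."""
    value = round(true_value + rng.gauss(0.0, noise_sigma))
    return max(0, min(7, value))


def averaged_reading(n: int, true_value: float = 5.42,
                     noise_sigma: float = 0.8, seed: int = 0) -> float:
    """Average n noisy 3-bit readings of the same star."""
    rng = random.Random(seed)
    return sum(read_3bit(rng, true_value, noise_sigma) for _ in range(n)) / n


for n in (1, 10, 100, 10_000):
    print(n, averaged_reading(n))  # drifts towards 5.42 as n grows
print("no noise:", averaged_reading(10_000, noise_sigma=0.0))  # stuck at 5.0
```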

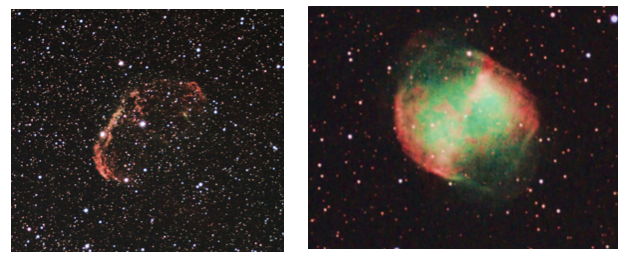

So here are my final images of the Crescent and Dumbbell nebulae, each made by stacking over 40 frames of 3 minutes apiece (a total exposure of about 2 hours per target).

Was it worth being bitten to death by midges, setting my dog off barking at 4am, putting my wife in a bad mood for the whole next day…I think yes!

Post by: Daniel Elijah


Very clear explanation, Daniel, and a powerful counterweight to any canine and spousal objections. But if intrinsic variation in the sensitivity of receptor pixels is a troublesome source of error, would it make sense to make tiny shifts in the field of view of the apparatus between exposures and compensate for this while stacking, or is that too difficult and unlikely to gain anything discernible?

Many thanks, Myron, for your thoughtful comment. What you are describing is dithering, a widely used and very effective method for removing fixed-pattern noise from an image. Here’s a link with more detail:

(http://dslr-astrophotography.com/dithering-optimal-results-dslr-astrophotography/)

As you mentioned, this method relies on the fact that some pixels are intrinsically more or less sensitive than others. These ‘hot’ and ‘cold’ pixels stay fixed in place within the image, while the actual details of astronomical objects shift when you move the scope. By shifting the position of the scope by a few pixels between exposures, you can locate and remove these bad pixels when stacking. It can be quite difficult to programme the telescope mount to make set movements between exposures, but I know it is possible using eqmod software.
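Here is a minimal one-dimensional sketch of the idea. It assumes the dither offsets are known exactly (real stacking software measures them by aligning on the stars) and uses a per-pixel median as the rejection step; sigma-clipping is a common alternative. The sky strip, sensor width, hot-pixel position and offsets are all made-up values. Because the hot pixel lands on a different patch of sky in each aligned frame, the median discards it; without dithering it lands on the same spot every time and survives.

```python
import statistics

# Hypothetical 1-D strip of sky: faint background with a star at sky position 7.
SKY = [10, 10, 10, 11, 10, 12, 20, 60, 20, 12, 10, 11, 10, 10, 10, 10]
SENSOR_WIDTH = 10
HOT_PIXEL = 4      # this sensor column always reads high, whatever it points at
HOT_VALUE = 255


def capture(offset: int) -> list[int]:
    """Sensor column i sees sky position i + offset; the hot pixel is fixed on the sensor."""
    frame = SKY[offset:offset + SENSOR_WIDTH]
    frame[HOT_PIXEL] = HOT_VALUE
    return frame


def align_and_median(frames: list[list[int]], offsets: list[int]) -> tuple[int, list[int]]:
    """Register frames onto sky coordinates, then median-combine the region all frames cover."""
    start = max(offsets)                  # first sky position every frame covers
    stop = min(offsets) + SENSOR_WIDTH    # one past the last such position
    combined = []
    for sky_pos in range(start, stop):
        values = [frame[sky_pos - off] for frame, off in zip(frames, offsets)]
        combined.append(statistics.median(values))
    return start, combined


OFFSETS = [0, 1, 2, 3, 4]   # hypothetical dither pattern, in pixels
start, clean = align_and_median([capture(o) for o in OFFSETS], OFFSETS)
print(start, clean)  # 4 [10, 12, 20, 60, 20, 12]
```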

In addition, some (older) DSLRs used for astrophotography can produce large blotches or bands in the image, which require dithering the scope by tens of pixels or more. This works, but you will have to throw away the pixels at the edges of the image, which is annoying! My old Nikon D200 had this problem.
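The edge loss is simple arithmetic if the frames differ only by a shift with no rotation, which is an assumption of this sketch: only the region covered by every frame can be stacked, so each axis loses the full spread between the most extreme dither offsets. `usable_area`, the 4000 x 3000 frame and the offsets below are hypothetical values, not the D200's.

```python
def usable_area(width: int, height: int,
                offsets: list[tuple[int, int]]) -> tuple[int, int]:
    """Width and height of the region covered by every dithered frame.

    Hypothetical helper: offsets are (dx, dy) pixel shifts of each frame,
    and frames are assumed to differ by pure translation (no rotation).
    """
    xs = [dx for dx, _ in offsets]
    ys = [dy for _, dy in offsets]
    # Each axis loses the full spread between the most extreme shifts.
    w = width - (max(xs) - min(xs))
    h = height - (max(ys) - min(ys))
    return max(w, 0), max(h, 0)


# Made-up dither pattern of tens of pixels on a hypothetical 4000 x 3000 frame.
offsets = [(0, 0), (40, -25), (-35, 30), (20, 45)]
w, h = usable_area(4000, 3000, offsets)
print((w, h), f"{w * h / (4000 * 3000):.1%} of the frame kept")  # (3925, 2930) 95.8% of the frame kept
```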