In this post I am diverting from my usual astrophotography theme and entering the world of computational neuroscience, a subject I studied for almost ten years. Computational neuroscience is a relatively new interdisciplinary branch of neuroscience that studies how areas of the brain and nervous system process and transmit information. An important and still unsolved question in computational neuroscience is how neurons transmit information between themselves. This is known as the problem of neural coding, and by solving it we could potentially understand how all our cognitive functions are underpinned by neurons communicating with each other. So for the rest of this post I will discuss how we can read the neural code and why the code is so difficult to crack.

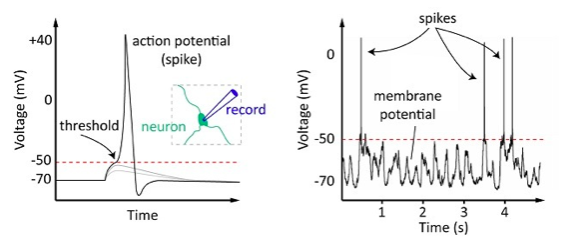

Since the 1920s we have known that excited neurons communicate through electrical pulses called action potentials or spikes (see Figure 1). These spikes can quickly travel down the long axons of neurons to distant destinations before crossing a synapse and activating another neuron (for more information on neurons and synapses see here).

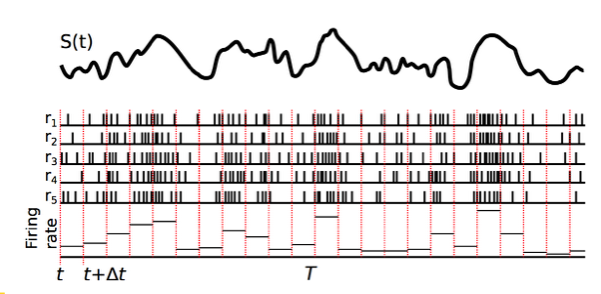

You would be forgiven for thinking that the neural coding problem is solved: neurons fire a spike when they see a stimulus they like and communicate this fact to other nearby neurons, while at other times they stay silent. Unfortunately, the situation is a bit more complex. Spikes are the basic symbols used by neurons to communicate, much like letters are the basic symbols of a written language. But letters only become meaningful when many are used together, and the same is true for spikes. When a neuron becomes excited it produces a sequence of spikes that, in theory, represents the stimuli the neuron is responding to. So if you can correctly interpret the meaning of spike sequences, you could understand what a neuron is saying. In Figure 2, I show a hypothetical example of a neuron responding to a stimulus.

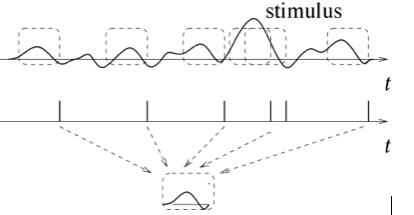

In this example a neuron is receiving a constantly fluctuating input. This is a bit like the signal you would expect to see in a neuron receiving a constant stream of inputs from thousands of other neurons. In response to this stimulus the receiving neuron constantly changes its spike firing rate. If we read this rate we can get a rough idea of what this neuron is excited by. In this case, the neuron fires faster when the stimulus is high and is almost silent when the stimulus is low. There is a mathematical method that can extract the stimulus that produces spikes, known as reverse correlation (Figure 3).

The method is actually very simple: each time a spike occurs we take a sample of the stimulus just before the spike. Hopefully many spikes are fired and we end up with many stimulus samples; in Figure 3 the samples are shown as dashed boxes over the stimulus. We then take these stimulus samples and average them together. If the spikes are being fired in response to a common feature in the stimulus, that feature will show up in the average. This is therefore a simple method of finding what a neuron actually responds to when it fires a spike. However, there are limitations to this procedure. For instance, if a neuron responds to multiple features within a stimulus then these will be averaged together, leading to a misleading result. Also, this method assumes that the stimulus contains a wide selection of different fluctuations. If it doesn’t, you can never really know what a neuron is responding to because you may not have stimulated it with anything it likes!
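
For readers who like to see things in code, here is a minimal sketch of the averaging step described above, written in Python with NumPy. Everything in it is made up for illustration: the function name `spike_triggered_average`, the toy neuron, its preferred `feature` and the firing `threshold` are all assumptions of mine, not a model of any real cell. The toy neuron simply fires whenever the stimulus just before it looks enough like its preferred feature.

```python
import numpy as np

def spike_triggered_average(stimulus, spike_indices, window):
    """Average the `window` stimulus samples that come just before each spike."""
    stimulus = np.asarray(stimulus, dtype=float)
    # Skip spikes that occur too early to have a full window before them.
    valid = [i for i in spike_indices if i >= window]
    if not valid:
        raise ValueError("no spike has a full stimulus window before it")
    # One row per spike: stimulus[i - window], ..., stimulus[i - 1].
    samples = np.stack([stimulus[i - window:i] for i in valid])
    return samples.mean(axis=0)

# --- Toy example (all values below are hypothetical) ---
rng = np.random.default_rng(0)
stimulus = rng.standard_normal(20000)           # widely varying random stimulus
feature = np.array([0.0, 0.2, 0.5, 1.0, 0.5])   # what the toy neuron "likes"
window = len(feature)

# How closely the stimulus just before each time step matches the feature.
drive = np.zeros_like(stimulus)
drive[window:] = np.correlate(stimulus, feature, mode="valid")[:-1]

threshold = 1.5                                  # assumed firing threshold
spike_indices = np.flatnonzero(drive > threshold)

sta = spike_triggered_average(stimulus, spike_indices, window)
similarity = np.corrcoef(sta, feature)[0, 1]
print("number of spikes:", len(spike_indices))
print("correlation between average and feature:", similarity)
```

Because the random stimulus contains a wide range of fluctuations, the average of the pre-spike samples should take on the shape of the preferred feature. If you swapped in a stimulus that barely varies, or a neuron that responds to two different features, the same code would give a much less informative average, which is exactly the limitation described above.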

In my next two posts, I will discuss how more advanced methods from the realms of artificial intelligence and probability theory have helped neuroscientists more accurately extract the meaning of neural activity.

Post by: Daniel Elijah